AI Bias Explained: Why Algorithms Aren’t as Fair as You Think

What if an algorithm decided whether you got a job interview, or labeled you as a potential criminal, without you ever knowing why? The unsettling truth is, it’s already happening.

AI bias is when artificial intelligence systems consistently produce unfair results that favor or disadvantage certain groups due to flawed data, algorithms, or usage context.

As AI quietly shapes hiring, healthcare, finance, and even criminal justice, its decisions can carry invisible prejudice with very visible consequences. Addressing AI bias isn’t just a technical challenge. It’s a societal one, because these systems can either reinforce inequality or help redefine fairness in the digital age.

Understanding Bias in AI: More Than Just a Tech Glitch

When people talk about AI bias, they’re not referring to a machine suddenly developing opinions about your favorite sports team.

Bias in this context means a consistent and unfair skew in the way an algorithm processes data or makes decisions. It’s not a one-off glitch; it’s a pattern, and it can be surprisingly stubborn to fix.

What AI Bias Really Means

In simple terms, bias happens when the AI’s output favors one outcome or group over another in ways that aren’t justified by the facts. This favoritism can creep in from several directions.

The Role of Flawed Training Data

Training data is a big one: if the dataset is incomplete, unbalanced, or full of historical prejudice, the AI will “learn” those patterns as if they were truth.

When Algorithms Themselves Build in Bias

The algorithm design itself can also hard-wire bias. If the math prioritizes speed or overall accuracy over thoroughness, for instance, the model may cut corners on smaller groups, and those shortcuts can disproportionately harm them.

Deployment Context: Right Model, Wrong Place

And let’s not forget deployment context: even a well-built model can produce biased results if it’s used in situations it wasn’t designed for.

Real-World Cases That Prove the Problem

The real-world examples are what make this less abstract and more urgent. Facial recognition systems, for instance, have shown significantly higher error rates when identifying people with darker skin tones compared to lighter-skinned individuals.

Job-screening algorithms have been caught favoring male candidates because historical hiring data tilted in that direction. These aren’t harmless quirks; they’re systemic issues that can affect careers, freedoms, and livelihoods.

How AI Learns the Wrong Lessons: Where Bias Creeps In

Here’s the thing: algorithms aren’t born biased. They pick it up, like bad habits, from their environment. And that environment is almost always the data we feed them.

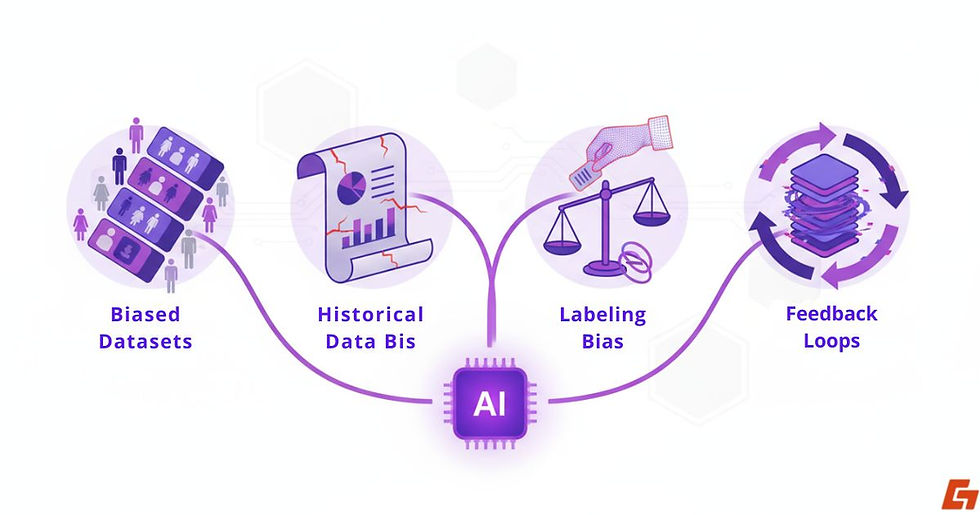

Biased Datasets: The Root of Skewed Decisions

Biased datasets are the most obvious culprit. If an AI model is trained on data that underrepresents certain groups, or overrepresents others, it will inevitably learn skewed rules.

This is why a medical AI trained mostly on data from middle-aged men might perform poorly when diagnosing women or younger patients.
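
A first sanity check for this kind of gap is simply to compare who is in the dataset with who the model is supposed to serve. The sketch below is a minimal illustration, not a full audit: the function name, the toy patient records, and the 50/50 reference split are all assumptions made up for the example.

```python
from collections import Counter

def representation_gaps(records, group_key, reference_shares):
    """Return (dataset share - reference share) for each group.

    Positive values mean the group is over-represented in the data,
    negative values mean it is under-represented.
    """
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }

# Hypothetical toy dataset: 8 male patients and 2 female patients.
patients = [{"sex": "male"}] * 8 + [{"sex": "female"}] * 2

# Assumed reference population for illustration: an even split.
gaps = representation_gaps(patients, "sex", {"male": 0.5, "female": 0.5})
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

Here men come out roughly 30 percentage points over-represented and women roughly 30 points under-represented. A balanced headcount doesn’t guarantee a fair model, but a gap this size is a reason to look closer before training.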

Historical Data: Inheriting Our Past Mistakes

Then there’s the weight of historical data issues. AI learns from the past, but history is riddled with human prejudice.

Feed it hiring data from decades of male-dominated industries and it may quietly assume men are more “qualified” for leadership roles. The model isn’t making a moral judgment; it’s just parroting patterns from the past.

Labeling Bias: Human Assumptions in Disguise

Labeling bias adds another twist. Much of AI training depends on human-annotated datasets, and humans bring their own unconscious assumptions into the labeling process.

That’s how you end up with, say, crime prediction models that associate certain neighborhoods with higher risk, not because of objective data, but because of biased labeling.

Feedback Loops: How Bias Reinforces Itself

And then there are feedback loops, arguably the most dangerous. A biased model produces biased outputs, those outputs get fed back into the system as “new data,” and the bias compounds over time.

It’s like a rumor in a small town: once it starts, it’s almost impossible to stop without direct intervention.
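
To see how quickly a loop like this can run away, here is a deliberately simplified toy simulation. It assumes a winner-take-all rule: attention goes to whichever area already has the most recorded incidents, and incidents only get recorded where attention goes. The area names, starting counts, and rates are hypothetical, and real systems are far messier.

```python
def simulate_feedback_loop(initial_records, incidents_per_visit, rounds):
    """Toy model: each round, the area with the most records gets the visit,
    and only the visited area has new incidents added to its record."""
    records = dict(initial_records)
    history = [dict(records)]
    for _ in range(rounds):
        target = max(records, key=records.get)       # follow the existing data
        records[target] += incidents_per_visit[target]
        history.append(dict(records))
    return history

# Two hypothetical areas with the SAME underlying incident rate,
# but a tiny head start for "north" in the historical records.
history = simulate_feedback_loop(
    initial_records={"north": 11, "south": 10},
    incidents_per_visit={"north": 5, "south": 5},
    rounds=3,
)

for step, snapshot in enumerate(history):
    share = snapshot["north"] / sum(snapshot.values())
    print(step, snapshot, f"north share = {share:.2f}")
```

After three rounds the records read 26 to 10, even though both areas behave identically. The one-incident head start is the only thing the system ever “learned” from.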

Why AI Safety Is Bigger Than Just Bias

We tend to talk about AI bias as if it’s the whole story, but it’s not. Bias is just one piece of a much larger safety puzzle.

Model Unpredictability: The Black Box Problem

Many modern AI systems, especially large neural networks, are what researchers call “black boxes.” We can see the inputs and outputs, but the decision-making process in between is murky.

That opacity means even unbiased models can make baffling or harmful decisions that are hard to diagnose. Generative AI adds its own version of the problem: “hallucinations,” confident-sounding output that is simply wrong.

Adversarial Attacks: Outsmarting the Machine

Then there are adversarial attacks, deliberate attempts to mislead AI models by feeding them subtly altered inputs.

In research demonstrations, changes as small as a few altered pixels, or a few stickers placed on a physical sign, have tricked vision systems into reading a stop sign as a speed limit sign. Bias won’t matter much if the model can be fooled that easily.
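
The mechanics are easier to see on a tiny model than on a real vision system. The sketch below uses a made-up three-feature linear classifier and nudges every input by at most 0.1 in the direction that hurts the correct answer, in the spirit of gradient-sign attacks. The weights, inputs, and function names are illustrative assumptions, not taken from any real system.

```python
def predict(weights, bias, x):
    """Linear classifier: class 1 if the score is positive, else class 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def sign(value):
    return (value > 0) - (value < 0)

def adversarial_example(weights, x, epsilon, true_label):
    """Shift each feature by at most epsilon, against the true label.

    For a linear model the score's gradient with respect to the input is
    just the weight vector, so sign(weight) gives the most damaging direction.
    """
    direction = -1 if true_label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model and input.
weights = [0.5, -0.4, 0.3]
bias = 0.0
x = [0.3, 0.2, 0.1]                      # score is about +0.10

x_adv = adversarial_example(weights, x, epsilon=0.1, true_label=1)

print(predict(weights, bias, x))         # original prediction
print(predict(weights, bias, x_adv))     # prediction after the small nudge
```

No single feature moved by more than 0.1, yet the prediction flips from class 1 to class 0, because many small pushes in the worst direction add up.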

Unintended Consequences: Good Intentions, Bad Outcomes

And of course, there’s the realm of unintended consequences. A health AI designed to optimize hospital efficiency might reduce patient wait times, but also inadvertently encourage shorter consultations that miss critical diagnoses.

The harm wasn’t intentional, but the outcome is still damaging.

Fairness Isn’t Enough Without Reliability

Trust in AI doesn’t hinge only on fairness. It also depends on reliability, transparency, and resilience against manipulation.

Without those, even a bias-free system might not be safe to use in critical areas like healthcare, finance, or criminal justice.

The Real-World Consequences of AI Bias

It’s easy to treat AI bias like a technical bug on a developer’s to-do list, but the truth is it has very real, very human consequences.

Finance: When Algorithms Decide Who Gets a Loan

Take finance, for example. If an algorithm evaluating loan applications leans on biased historical data, entire groups can find themselves consistently denied credit or offered worse interest rates.

That’s not just a spreadsheet problem; it’s people losing chances to buy homes, start businesses, or invest in education.

Healthcare: Misdiagnosis and Missed Opportunities

Healthcare has its own version of this. A diagnostic AI trained mostly on data from certain ethnic groups may misinterpret symptoms in underrepresented populations.

The result? Misdiagnosis, delayed treatment, and even exclusion from clinical trials that could have been life-saving.

Hiring: The Invisible Filter on Resumes

In hiring, bias in AI systems can act like an invisible filter. Resumes from candidates with certain names or education backgrounds might never reach a human recruiter, all because the model “learned” those profiles were less successful in the past.

Criminal Justice: Flawed Risk Assessments with Lasting Impact

In criminal justice, risk assessment tools have shown patterns of labeling minority defendants as higher-risk, leading to harsher bail conditions or sentencing recommendations.

The scary part is that these decisions often get presented as objective and data-driven, when in fact they’re quietly shaped by flawed assumptions.
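
One way to test whether an “objective” risk score treats groups alike is to compare false positive rates: among people who did not go on to reoffend, how many were flagged as high-risk anyway? The sketch below assumes you have outcome data to check against. The field names and records are invented for illustration.

```python
def false_positive_rate(rows):
    """Share of people who did NOT reoffend but were still flagged high-risk."""
    negatives = [r for r in rows if not r["reoffended"]]
    if not negatives:
        raise ValueError("no non-reoffending cases to evaluate")
    flagged = sum(1 for r in negatives if r["flagged_high_risk"])
    return flagged / len(negatives)

def fpr_by_group(rows, group_key):
    """Compute the false positive rate separately for each group."""
    groups = {}
    for r in rows:
        groups.setdefault(r[group_key], []).append(r)
    return {g: false_positive_rate(members) for g, members in groups.items()}

def person(group, flagged, reoffended):
    return {"group": group, "flagged_high_risk": flagged, "reoffended": reoffended}

# Hypothetical records: both groups have 4 people who did not reoffend.
records = (
    [person("A", True, False)] * 2 + [person("A", False, False)] * 2
    + [person("B", True, False)] * 1 + [person("B", False, False)] * 3
    + [person("A", True, True), person("B", True, True)]
)

rates = fpr_by_group(records, "group")
print(rates)  # {'A': 0.5, 'B': 0.25}
```

In this toy data, people in group A who never reoffended were wrongly flagged twice as often as their counterparts in group B, a gap that overall accuracy figures would hide.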

Spotting the Problem: How We Detect AI Bias

If we want to fix the problem, first we have to see it clearly, and that’s not always straightforward. AI systems can be complex, and bias isn’t something you can just spot with the naked eye.

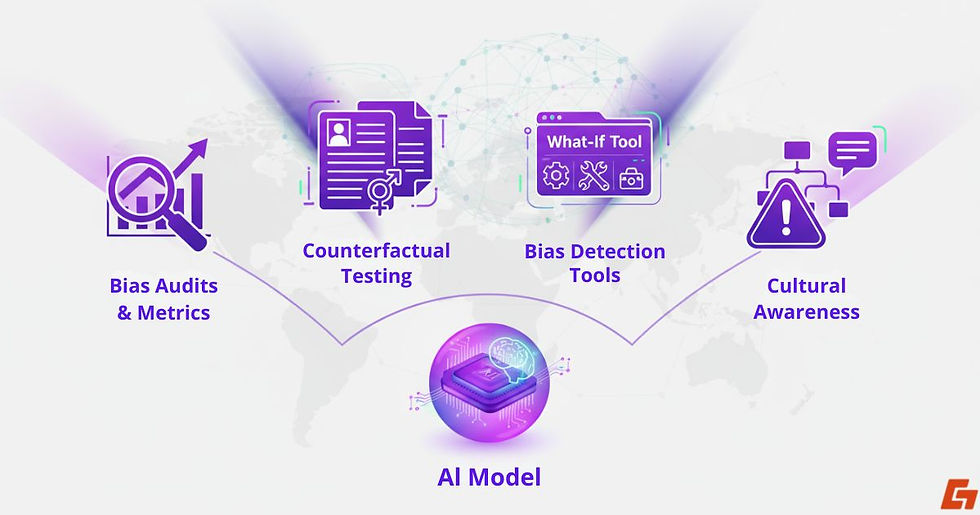

Bias Audits and Fairness Metrics

That’s why researchers use bias audits and fairness metrics to measure how different groups are affected by an algorithm’s decisions.

Metrics like demographic parity or disparate impact can reveal if one group consistently gets worse outcomes than another.
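
Both metrics boil down to comparing approval rates across groups: demographic parity looks at the difference, disparate impact at the ratio. Here is a minimal sketch with made-up loan decisions. The function names are assumptions for this example, not the API of any particular toolkit.

```python
def selection_rates(decisions):
    """decisions: list of (group, approved) pairs -> approval rate per group."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {g: approved[g] / totals[g] for g in totals}

def demographic_parity_difference(rates):
    """Gap between the highest and lowest approval rate (0 means parity)."""
    return max(rates.values()) - min(rates.values())

def disparate_impact_ratio(rates):
    """Lowest approval rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group received a favorable outcome")
    return min(rates.values()) / highest

# Hypothetical decisions: group A approved 6 of 10, group B approved 3 of 10.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
print(rates)
print(demographic_parity_difference(rates))
print(disparate_impact_ratio(rates))
```

Group B is approved at half the rate of group A here. A common rule of thumb, the four-fifths rule, treats a ratio below 0.8 as a warning sign worth investigating, though a number alone can’t tell you why the gap exists.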

Counterfactual Testing: Changing One Detail at a Time

Another method is counterfactual testing: asking, “Would this decision change if we altered just one sensitive attribute?”

For example, if a loan approval flips from “yes” to “no” simply because the applicant’s gender was changed in the data, you’ve found a clear signal of bias.
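
In code, the test is little more than a loop: copy each applicant, swap the one attribute, and see whether the answer changes. The sketch below runs it against a deliberately biased stand-in model. The model, its thresholds, and the applicants are all hypothetical.

```python
def counterfactual_flips(model, applicants, attribute, values):
    """Return the applicants whose decision changes when ONLY `attribute` changes."""
    flipped = []
    for applicant in applicants:
        outcomes = {model({**applicant, attribute: v}) for v in values}
        if len(outcomes) > 1:            # same person, different answers
            flipped.append(applicant)
    return flipped

def biased_model(applicant):
    """Stand-in model with an unfair rule baked in, for demonstration only."""
    threshold = 50_000 if applicant["gender"] == "male" else 60_000
    return applicant["income"] >= threshold

applicants = [
    {"income": 55_000, "gender": "female"},
    {"income": 70_000, "gender": "female"},
    {"income": 40_000, "gender": "male"},
]

flips = counterfactual_flips(biased_model, applicants, "gender", ["male", "female"])
print(flips)  # only the 55,000 applicant is affected
```

One caveat: passing this test doesn’t prove a model is fair. If another variable quietly stands in for the sensitive attribute, flipping the attribute itself won’t reveal it.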

Specialized Tools for Bias Detection

There are also specialized tools built to help spot these patterns. IBM’s AI Fairness 360 toolkit and Google’s What-If Tool are two examples that let teams visualize how their models treat different subgroups.

These tools don’t just measure; they help explain where bias might be creeping in, giving developers a clearer path toward correction.

The Cultural Challenge Behind Detection

But even with advanced detection, the challenge is cultural as much as technical.

Organizations need to be willing to ask uncomfortable questions about their own data and decision-making processes. Without that openness, AI bias will stay hidden in plain sight.

How to Reduce AI Bias Before It Causes Harm

Here’s where things get tricky: reducing AI bias isn’t about flipping a single switch. It’s a process, one that starts long before a model ever goes live.

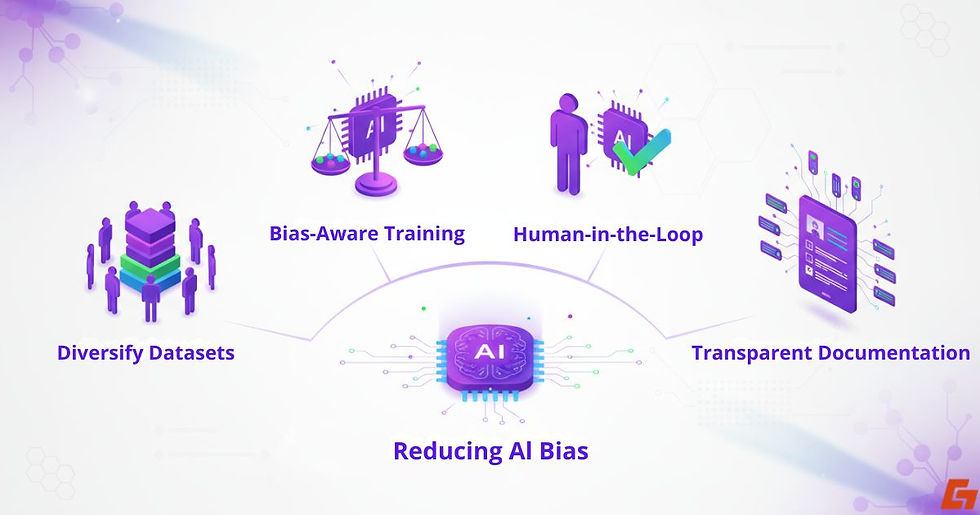

Diversifying Datasets: Filling the Gaps

The most obvious step is diversifying datasets. If the data reflects a wider range of demographics, contexts, and experiences, the AI has a better shot at producing fair results.

That means actively seeking out missing perspectives, not just relying on what’s already available.

Bias-Aware Training Techniques

Next is applying bias-aware training techniques. This could involve reweighting certain data points to balance representation, or using algorithms specifically designed to correct skewed patterns during learning.

In some cases, it means limiting the influence of variables that might indirectly encode sensitive attributes.
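
As a concrete illustration of reweighting, the sketch below follows one well-known scheme, usually called reweighing: each example gets the weight P(group) × P(label) / P(group, label), so that group and outcome look statistically independent in the weighted data. The toy hiring data and function names are assumptions for the example.

```python
from collections import Counter

def reweighing_weights(samples):
    """samples: list of (group, label) pairs -> weight per (group, label) combo.

    weight = P(group) * P(label) / P(group, label)
    """
    n = len(samples)
    group_counts = Counter(group for group, _ in samples)
    label_counts = Counter(label for _, label in samples)
    pair_counts = Counter(samples)
    return {
        (group, label): group_counts[group] * label_counts[label] / (n * count)
        for (group, label), count in pair_counts.items()
    }

def weighted_positive_rate(samples, weights, group):
    """Positive-label rate for one group after applying the weights."""
    members = [s for s in samples if s[0] == group]
    total = sum(weights[s] for s in members)
    positive = sum(weights[s] for s in members if s[1] == 1)
    return positive / total

# Hypothetical history: men hired 6 of 8 times, women 2 of 4 times.
samples = (
    [("men", 1)] * 6 + [("men", 0)] * 2
    + [("women", 1)] * 2 + [("women", 0)] * 2
)

weights = reweighing_weights(samples)
men_rate = weighted_positive_rate(samples, weights, "men")
women_rate = weighted_positive_rate(samples, weights, "women")
print(weights)
print(men_rate, women_rate)
```

Hired women get a weight above 1 and hired men a weight below 1, and both groups end up with the same weighted hire rate of about 0.67. A training routine that accepts per-sample weights can then learn from the rebalanced picture without any rows being deleted or invented.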

Human-in-the-Loop: Adding a Safety Net

Human-in-the-loop systems also play a role, especially in high-stakes decisions. By giving people the final say in critical outcomes, whether that’s approving a mortgage or diagnosing a patient, we add a safeguard against blind algorithmic errors.
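
In practice this often comes down to a routing rule: the model only acts on its own when the decision is low-stakes and it is confident. Here is a minimal sketch. The 0.9 threshold, the field names, and the `route` function are illustrative assumptions, and the right cutoff depends heavily on the domain.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    outcome: str      # "approve", "deny", or "pending"
    decided_by: str   # "model" or "human_review"

def route(model_outcome, confidence, high_stakes, threshold=0.9):
    """Send high-stakes or low-confidence cases to a person."""
    if high_stakes or confidence < threshold:
        # The model's suggestion can still be shown to the reviewer,
        # but the final call is theirs.
        return Decision(outcome="pending", decided_by="human_review")
    return Decision(outcome=model_outcome, decided_by="model")

print(route("approve", confidence=0.95, high_stakes=False))
print(route("approve", confidence=0.60, high_stakes=False))
print(route("approve", confidence=0.99, high_stakes=True))
```

Note that the high-stakes case goes to a human even at 99% confidence. That is the point of the safeguard: confidence measures how sure the model is, not whether it is right.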

Transparent Documentation Builds Trust

Finally, transparent documentation is essential. Teams should clearly outline how an AI was trained, what data it used, and where its limitations lie.

This isn’t just about compliance; it’s about building trust. When users understand both the strengths and weaknesses of a system, they’re better equipped to judge when and how to rely on it.
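
Documentation like this is easier to keep honest when it lives next to the model in a structured form, in the spirit of what are often called model cards. The sketch below is one possible shape, not a standard: every field name and value is a hypothetical example.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ModelCard:
    name: str
    intended_use: str
    training_data: str
    known_limitations: list = field(default_factory=list)
    fairness_checks: dict = field(default_factory=dict)

    def to_json(self):
        """Serialize the card so it can be published alongside the model."""
        return json.dumps(asdict(self), indent=2)

# Hypothetical card for an imaginary loan-screening model.
card = ModelCard(
    name="loan-screening-demo",
    intended_use="Pre-screening consumer loan applications for human review",
    training_data="Historical applications from a single region (illustrative)",
    known_limitations=[
        "Not evaluated on applicants under 21",
        "Should not be used for business loans",
    ],
    fairness_checks={"disparate_impact_ratio": 0.85},
)

print(card.to_json())
```

The limitations list matters as much as the metrics. It tells users where the model has not been tested, which is often where it fails.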

Regulation, Ethics, and Governance: Setting the Rules for AI

The conversation about AI bias isn’t happening in a vacuum. Governments, tech companies, and watchdog groups are all scrambling to define rules of the road.

Global Regulations Shaping AI Fairness

In the European Union, the EU AI Act is setting the tone with risk-based classifications and strict transparency requirements.

In the U.S., the White House’s Blueprint for an AI Bill of Rights outlines non-binding principles for fairness, explainability, and privacy. Back in the EU, the GDPR already gives individuals some power over how their data is used.

Why Explainability Matters for Compliance

Regulation matters because it forces organizations to think beyond technical performance. Laws that demand explainability, being able to show how a decision was reached, are especially important in tackling AI bias.

If a company can’t explain why its hiring AI consistently prefers one type of candidate, that’s a red flag not just ethically, but legally.

Ethics Boards and Open-Source Communities

Ethics boards and open-source communities are also stepping in. Independent oversight can spot bias before it becomes a PR disaster or, worse, harms real people.

Meanwhile, collaborative projects share tools, benchmark datasets, and fairness guidelines to help level the playing field for smaller developers who may not have massive compliance teams.

Governance as a Cultural Standard

But governance isn’t only about rules and penalties; it’s about setting cultural expectations. The more we normalize the idea that fairness and safety are core design principles, the less AI bias will slip through unnoticed.

Should You Trust AI? How to Make That Call

Trusting AI isn’t a yes-or-no question. It’s a bit like trusting a new coworker: you size them up based on transparency, past performance, and whether you can double-check their work. When it comes to AI, the same logic applies.

Transparency: Seeing Behind the Curtain

First, look at transparency. Does the organization deploying the AI openly share how it works, what data it uses, and how it addresses AI bias? If all you get is a glossy marketing page, be skeptical.

Intent: Who Really Benefits?

Next is intent. What’s the AI actually designed to do, and who benefits most from its use? An AI that recommends music needs to clear a much lower bar for trust than one deciding parole eligibility.

Track Record: Proof in the Results

The track record also matters. Has the system been independently tested for fairness and accuracy? Have results been published, and if so, do they show improvements over time?

If the history is opaque or peppered with controversies, that’s a signal to dig deeper.

Human Oversight: The Final Safety Check

Finally, human oversight is non-negotiable for high-impact decisions. Even the best-trained AI can make mistakes, or reflect subtle bias in ways that only a person might catch.

Systems that combine automation with human review strike a better balance between efficiency and fairness.

Trust Earned Through Addressing Bias

In the end, trusting AI isn’t about blind faith; it’s about knowing the conditions under which it’s earned.

And when AI bias is addressed head-on rather than swept aside, that trust becomes a whole lot easier to give.

Keeping AI Honest Starts With Us

We’ve seen how subtle flaws in data, design, and deployment can quietly bend AI decisions, and why fairness is only part of the safety equation. Tackling AI bias means addressing both the technical shortcomings and the deeper human values built into these systems.

Fair and trustworthy AI doesn’t happen by accident. It’s the result of deliberate choices, constant vigilance, and the courage to question even the tools we rely on most.

So next time an AI system plays a role in your future, whether it’s approving a loan, screening a job application, or diagnosing a health condition, ask yourself: Who trained it? Whose values shaped it? And most importantly, is anyone truly holding it accountable?
